TL;DR:

Hexagonal Architecture is a fabulous pattern that has more advantages than the ones for which it was originally created. One can take the orthodox view that patterns do not evolve, and that it is important to keep Alistair Cockburn’s pattern as it was originally described. Or one can think that some patterns may evolve, and that Hexagonal Architecture has more facets than we think. This article discusses and compares these 2 approaches, takes an example with one new facet related to DDD, and then asks an important question to its creator and the dev community at large (important for the author of this post, at least ;-)

First Adapters were technological

Hexagonal Architecture originated in a project Alistair Cockburn once worked on, related to a weather forecast system. His main objective seemed to be supporting a great number of different technologies to receive weather conditions as inputs, but also connecting and publishing the results of the weather forecasts to a huge number of external systems.

This explains why he came up with the concept of interchangeable plugins (now we say ‘configurable dependencies’, following Gerard Meszaros) for the technologies at the borders of the app, and a stable application-business code inside (what Alistair called the “hexagon”).

The pattern gained some traction at the time (see for instance the amazing GOOS book, which talks about it: http://www.growing-object-oriented-software.com), but it was more than a decade later that a community brought this pattern back from its ashes ;-) and started exploring it more deeply. This community gathered people who wanted to focus more on the business value of our software (rather than on pure tech fads): the Domain Driven Design (DDD) community.

Then came the DDD practitioners

As a member of this DDD community, I found the pattern very interesting for many other reasons, the main one being the capability to protect and isolate my business domain code from the technical stacks.

Why should I protect my domain code from the outside world?!?

I still remember that day in 2008 when I witnessed a bad situation where a major banking app had to be fully rewritten after we had collectively decided to ban a dangerous low latency multicast messaging system (I was working for that bank at the time). We had taken that decision because we were all suffering from serious and regular network outages due to multicast NACK storms. We were in a bad and fragile situation where any slow consumer could break the whole network infrastructure shared by many other applications and critical systems of the bank. Sad panda.

Why couldn’t this dev team just switch from one middleware tech to another? Because their whole domain model was entangled with, and built around, that middleware’s data format at its core (i.e. instances of XMessages everywhere in their core domain 😳).

Moreover, the entire threading model of this application was built upon the one from the low latency middleware library. In other words: whether their code needed to be thread-safe or not depended on the assumptions and guarantees provided so far by the middleware lib. Once you removed that lib, you suddenly lost all these thread-safety guarantees and assumptions. The whole threading model of this complicated application would have vanished, making the app unusable with tons of new race conditions, Heisenbugs and deadlocks. When it’s an app that can lose millions of euros per minute, you don’t make this decision on a coin toss ;-)

I’m rarely a proponent of rebuilding from scratch and often prefer refactoring existing code that already brings value. But in that case, where everything was so entangled... it was really the best thing to do. I’ll let you imagine how challenging it was to sell this kind of full reconstruction to the business...

Anyway. I wanted to tell you this story in order to emphasize that splitting your domain code from the technology is a real need. It has real implications and benefits for concrete apps. It’s not just an architect’s whim. I’m not even talking about the interesting capability to switch from one technology to another in a snap, as needed by Alistair. No. Just a proper split between our domain code and the infra-tech one.

It’s

crucial. But it’s just the beginning of this journey.
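To make that split a bit more concrete, here is a minimal Python sketch. Every name in it (`XMessage` as a stand-in for the middleware format, `Quote`, `QuoteFeed`, `XMessageQuoteAdapter`, `LastPriceBoard`, and the `sym`/`px` fields) is hypothetical and only for illustration; it is not the bank's actual code. The idea is simply that the domain knows its own type and a port, while the middleware format stays confined to one adapter.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol


@dataclass(frozen=True)
class XMessage:
    """Stand-in for the middleware's wire format (hypothetical)."""
    fields: dict


@dataclass(frozen=True)
class Quote:
    """Domain concept: no trace of the middleware format."""
    instrument: str
    price: float


class QuoteFeed(Protocol):
    """Driven port, expressed in domain terms only."""

    def subscribe(self, on_quote: Callable[[Quote], None]) -> None: ...


class XMessageQuoteAdapter:
    """Adapter: the only place that knows about XMessage."""

    def __init__(self) -> None:
        self._handlers: List[Callable[[Quote], None]] = []

    def subscribe(self, on_quote: Callable[[Quote], None]) -> None:
        self._handlers.append(on_quote)

    def on_middleware_message(self, message: XMessage) -> None:
        # Translate the wire format into the domain type at the border.
        quote = Quote(message.fields["sym"], float(message.fields["px"]))
        for handler in self._handlers:
            handler(quote)


class LastPriceBoard:
    """Domain code: depends on the port, never on the middleware."""

    def __init__(self, feed: QuoteFeed) -> None:
        self.last_prices: Dict[str, float] = {}
        feed.subscribe(self._record)

    def _record(self, quote: Quote) -> None:
        self.last_prices[quote.instrument] = quote.price


adapter = XMessageQuoteAdapter()
board = LastPriceBoard(adapter)
adapter.on_middleware_message(XMessage({"sym": "EURUSD", "px": "1.21"}))
```

With this shape, banning the middleware would mean rewriting `XMessageQuoteAdapter` only. Note that the sketch says nothing about the threading guarantees mentioned in the story; those would need the same kind of explicit border.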

Models Adapters

Indeed. With his pattern, Alistair was trying to protect his application domain code and keep it stable in the face of a huge number of different technologies all around (the reason why I talked about technological Adapters earlier). But separating our domain code from the infrastructure one, and preserving it, may not be enough.

As

DDD practitioners, we may want to protect our domain code from more than that.

As

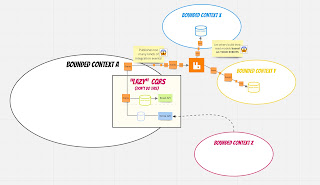

DDD practitioners we may also want to protect it from being polluted by other

models and external perspectives (one can call them other Bounded Contexts).

>>>>>

The sub part in grey below explains the basics of DDD you need in order to understand the end of the post. You can skip it if you already know what Bounded Contexts (BCs) and Anti-corruption Layers (ACLs) are.

Bounded Contexts?

A Bounded Context (BC) is a linguistic and conceptual boundary within which the words, people and models that apply are consistent and used to solve specific Domain problems: the specific problems of business people who speak the same language and share the same concerns (e.g. the Billing context, the Pre-sales context...).

DDD recommends that every BC have its own models, tailor-made for its own specific usages. For instance, a ‘Customer’ in a Pre-sales BC will only have information like age, socio-professional category, hours of availability, a list of the products and services they already bought, etc. Whereas a ‘Customer’ in the Accounting-Billing BC will have more specific information such as address, payment method, etc. DDD advises us to avoid having one single ‘Customer’ model shared by every BC, in order to avoid conflicts and misinterpretation of words, and to allow polysemy between 2 different BCs without incidents.
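As a small illustration of the ‘Customer’ example above, here is a Python sketch of one model per Bounded Context. The class and field names are assumptions made up for this sketch; in a real code base each class would probably live in its own module or package and simply be called `Customer` there.

```python
from dataclasses import dataclass, field
from typing import List


# --- Pre-sales Bounded Context (hypothetical model) ---
@dataclass
class PreSalesCustomer:
    age: int
    socio_professional_category: str
    availability_hours: str
    purchased_products: List[str] = field(default_factory=list)


# --- Accounting-Billing Bounded Context (hypothetical model) ---
@dataclass
class BillingCustomer:
    address: str
    payment_method: str


# The same person, seen through two different lenses.
prospect = PreSalesCustomer(
    age=37,
    socio_professional_category="engineer",
    availability_hours="18:00-20:00",
    purchased_products=["home insurance"],
)
payer = BillingCustomer(address="1 Main Street", payment_method="direct debit")
```

Neither model carries the other one's fields, so a change in what Billing needs to know about a customer does not ripple into Pre-sales.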

The interesting thing with the Bounded Contexts of DDD is that each one can be designed autonomously from the others (the reason why some people say that DDD is a way to do Agile architecture).

DDD also comes with lots of strategic patterns to deal with the relationships between BCs, one of them being the famous Anti-Corruption Layer (aka ACL).

Anti-corruption Layer FTW

As I already explained in another post, the Anti-Corruption Layer is a pattern popularized by DDD which allows a (Bounded) Context not to be polluted by the inconsistency or the frequent changes of a model coming from another Context with which it must work.

>>>>>

An

ACL is like a shock absorber between 2 Contexts. We use it to reduce the

coupling with another Context in our code. By doing so, we isolate our coupling

in a small and well protected box (with limited access): the ACL.

Do

you see where I am going with this?

An ACL is a kind of Adapter. But an Adapter for Models, not for purely technological stuff.

As I already wrote elsewhere, an anti-corruption layer can be implemented in various forms.

At least 3:

- an external gateway/service/intermediate API

- a dedicated intermediate database (for old systems)

- or just an in-proc adapter within a hexagonal architecture

And it is this last form that will interest us for the rest of this post.

Indeed,

the [technological] adapters of Alistair’s first description of his pattern may

be a sweet spot for the [models] adapters we need too when we focus on languages

and various models (like when we practice DDD).

And if we do agree on the occasional need to have Models Adapters (aka ACLs) in our Hexagonal Architectures, we can start discussing the options and the design tradeoffs.

ACLs in Hexagonal Architecture, OK. But where?

The

next question we may ask ourselves is: where to put our ACL code when we want

to have it within our Hexagonal Architecture? (I.e. the last choice of the

three presented above). There have been debates about it on Twitter recently.

And the main question was:

Should

we put the ACL outside or inside the hexagon?

As

an important disclaimer, I would say that there is no silver bullet nor unique

answer to that question. As always with software architecture, the design

choices and the tradeoffs we make should be driven by our context, our

requirements and set of constraints (either technical, business, economical,

sourcing, cultural, socio-technical...).

That

being said, let’s compare these 2 options.

Option 1: ACL as part of the Hexagon

Spoiler

alert: I’m not a big fan of it. To be honest, been there, done that, suffered a

little bit with extra mapping layers (new spots for bugs). So not for me

anymore. But since it has recently been discussed on twitter, I think it’s

important to present this configuration.

This is the ‘technological’ or the orthodox option, if I dare say. The one saying that driven Ports and Adapters on the right side should only expose what is available outside as external dependencies, and do so without trying to hide the number or the complexity of what it takes to talk to or to orchestrate all these external elements.

We

usually pick that option if we consider that coping with other teams’

structural architecture is part of our application or domain code. Not the

technical details of them of course. But their existence (i.e. how many

counterparts, APIs, DBs or messaging systems do we need to interact with).

And

for that, we necessarily need a counterpart model in our hexagon FOR EVERY ONE

OF THEM!

Why? Remember, we don’t want our hexagon to be polluted by external technical DTOs or any other serialization formats. So, for every one of them, there will be an adaptation (in the proper driven Adapter) in order to map it to its non-technical version: the one our hexagonal code needs in order to deal with it. A Hexagonal-compliant model (represented in blue in my sketch above).

It’s important here to visualize that our ‘Hexagonal code’ in this option is composed of the Domain code + the ACL one (but an ACL that won’t have to deal with technical formats).
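Here is a minimal Python sketch of what this Option 1 layout can look like with two external systems. The CRM and loyalty backends, all class names, the payload keys and the 1000-point VIP rule are assumptions invented for this sketch; plain dicts stand in for the technical DTOs.

```python
from dataclasses import dataclass
from typing import Protocol


# Intermediate, non-technical models living INSIDE the hexagon,
# one per external dependency (the "blue" models of Option 1).
@dataclass(frozen=True)
class CrmContact:
    contact_id: str
    full_name: str


@dataclass(frozen=True)
class LoyaltyAccount:
    contact_id: str
    points: int


# One driven port per external system.
class CrmPort(Protocol):
    def contact(self, contact_id: str) -> CrmContact: ...


class LoyaltyPort(Protocol):
    def account(self, contact_id: str) -> LoyaltyAccount: ...


# The domain concept we actually care about.
@dataclass(frozen=True)
class Customer:
    name: str
    is_vip: bool


class CustomerAcl:
    """ACL inside the hexagon: orchestrates both ports and maps the
    intermediate models to the domain concept (second mapping step)."""

    def __init__(self, crm: CrmPort, loyalty: LoyaltyPort) -> None:
        self._crm = crm
        self._loyalty = loyalty

    def customer(self, contact_id: str) -> Customer:
        contact = self._crm.contact(contact_id)
        account = self._loyalty.account(contact_id)
        # Hypothetical rule: 1000 points or more makes a VIP.
        return Customer(contact.full_name, account.points >= 1000)


# Driven adapters (outside the hexagon): first mapping step, from each
# technical payload to its intermediate model.
class CrmAdapter:
    def __init__(self, rows: dict) -> None:
        self._rows = rows

    def contact(self, contact_id: str) -> CrmContact:
        row = self._rows[contact_id]
        return CrmContact(contact_id, f'{row["firstName"]} {row["lastName"]}')


class LoyaltyAdapter:
    def __init__(self, rows: dict) -> None:
        self._rows = rows

    def account(self, contact_id: str) -> LoyaltyAccount:
        return LoyaltyAccount(contact_id, int(self._rows[contact_id]["pts"]))


crm = CrmAdapter({"42": {"firstName": "Ada", "lastName": "Lovelace"}})
loyalty = LoyaltyAdapter({"42": {"pts": "1500"}})
customer = CustomerAcl(crm, loyalty).customer("42")
```

Even in this tiny case there are two mapping steps per backend (payload to intermediate model, then intermediate model to `Customer`), which is where the extra models come from.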

Why I abandoned this option over the years with my projects

To conclude with that first option, I would say that there are 2 main reasons why I abandoned it in lots of contexts:

- It forces us to create 1 non-technical intermediate model (in blue) for every external dependency (leading to 5 different models in our example). This is cumbersome and bug-prone. I saw lots of devs getting tired of all those extra layers for a very limited benefit (i.e. just to follow Hexagonal Architecture by the book).

- It opens the door for junior devs or newcomers to introduce technical stuff within our hexagon. “But... I thought it was ok since we already have an ACL in that module/assembly?!?” It reduces the clarity of the domain-infra code duo.

These

are the reasons why I progressively moved over the years towards another

tradeoff. A new option which is one of my favorite heuristics now.

Option 2: ACL within a driven Adapter

This

option consists of putting our ACL code into one or more adapters.

If we think that it makes sense to replace 2 different Adapters with one ACL Adapter doing the orchestration and the adaptation, we can even avoid coding the intermediate layers we previously had for every Adapter (in blue on the option 1 diagram). It means less plumbing code, less mapping and fewer bugs.

When something changes in one of the external backends used by the ACL Adapter (let’s say a pink square), the impact is even smaller than with Option 1.

Indeed,

all you have to change in that situation is your ACL code adapting this

external backend concept to one of your domain code's (black circles on the

diagrams).

With

option 1, you will have more work. You will also have to change the

corresponding intermediate data model in blue (with more risk of bugs in that

extra mapping).
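A minimal Python sketch of Option 2 could look like the following. As before, the CRM and loyalty backends, the class names, the payload keys and the 1000-point VIP rule are assumptions made up for illustration, and plain dicts stand in for the external payloads.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Customer:
    """Domain concept, the only model the hexagon sees."""
    name: str
    is_vip: bool


class Customers(Protocol):
    """The single driven port, shaped by the domain's needs."""

    def by_id(self, customer_id: str) -> Customer: ...


class CustomerAclAdapter:
    """ACL living in a driven adapter: it orchestrates both external
    backends and maps their payloads straight to the domain concept."""

    def __init__(self, crm_rows: dict, loyalty_rows: dict) -> None:
        self._crm_rows = crm_rows
        self._loyalty_rows = loyalty_rows

    def by_id(self, customer_id: str) -> Customer:
        crm = self._crm_rows[customer_id]
        loyalty = self._loyalty_rows[customer_id]
        name = f'{crm["firstName"]} {crm["lastName"]}'
        # Hypothetical rule: 1000 points or more makes a VIP.
        return Customer(name, int(loyalty["pts"]) >= 1000)


class WelcomeMessages:
    """Domain code: one port, one model, no external concept."""

    def __init__(self, customers: Customers) -> None:
        self._customers = customers

    def greeting_for(self, customer_id: str) -> str:
        customer = self._customers.by_id(customer_id)
        prefix = "Welcome back, dear" if customer.is_vip else "Hello"
        return f"{prefix} {customer.name}"


acl = CustomerAclAdapter(
    crm_rows={"42": {"firstName": "Ada", "lastName": "Lovelace"}},
    loyalty_rows={"42": {"pts": "1500"}},
)
greeting = WelcomeMessages(acl).greeting_for("42")
```

If the loyalty backend renames `pts` tomorrow, only `CustomerAclAdapter.by_id` has to change; there is no intermediate model to update along the way.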

Clarifications

As I am not a native English speaker (as one may have noticed ;-P), I think it is worth clarifying several points before concluding:

- I’m not saying that one should always put an ACL in a hexagonal architecture.

- I’m saying that if you need to have an ACL in your hexagonal architecture, you should definitely use the sweet spot of the Adapters to do so.

- I’m saying that in some cases, you can even merge 2 former Hexagonal Adapters into a single one that will play the ACL role.

- I always want my ports to be designed and driven by my own Domain needs and expressivity. I don’t want my domain code to use infrastructure concepts or someone else’s external concepts that should not bother my domain logic. In other words: putting a driven port for my domain concept, instead of putting a driven port for each external system, is not a mistake. It’s an informed decision.

To conclude: hexagonal or not hexagonal?

When Alistair created his pattern, his main driver was to easily swap one technology for another without breaking his core domain code. Adaptability was his main driver and the wide variety of technologies was his challenge.

I

call this the "technological facet" of the pattern, recently confirmed by

Alistair on twitter:

“The

Ports & Adapters pattern calls explicitly for a seam at a

technology-semantic boundary” (https://twitter.com/totheralistair/status/1333088400459632640?s=21)

But

one of the keys to the pattern's success in my opinion was Alistair's lack of

detail in his original article. We all saw value in it, but almost everyone

struggled to understand it. Almost all of us have had our own definition of

what a port is and what an adapter is for years (you can open some books if you

want to check that out ;-)

This fuzziness allowed some of us to play with it freely and to discover new facets or properties of it.

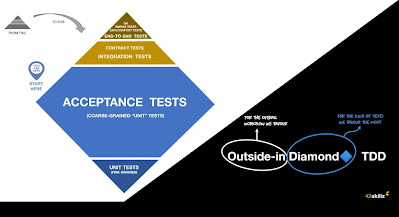

One may discover that Ports and Adapters were top notch for testing (the "testability facet"). Another one may discover that Ports and Adapters were truly awesome to properly split our domain code from the infrastructure one (the "tactical DDD facet"). Another one may find out that the pattern may help to reduce the layering and complexity of our architecture (the "simplicity facet"). Another one may realize that the initial premise of the Pattern was rarely the reason why people were using it, and that the ports were often leaky abstractions preventing you from properly covering multiple technologies behind them (the devil is in the details). One may find it interesting for getting a good time to market and quick feedback about what is at stake (the "quick feedback facet"). One may find it interesting for postponing architectural decisions until the right moment (the "late architectural decisions facet"). One may find it interesting to adapt more than technologies: not only technologies but also external models, like I described here (the "strategic DDD facet").

Even

Alistair evolved and changed his mind over the years about important things

such as the symmetry or the absence of symmetry of his Pattern (now we all

know that the left and right side are asymmetrical ;-)

A multi-faceted pattern?

I personally think that the beauty of this pattern lies in our various interpretations and implementations. The fuzziness of Alistair’s original Hexagonal Architecture article also has something in common with Eric Evans’s Blue Book. It’s so conducive to various interpretations that it ages really well.

Like the image of the Hexagon itself, it’s a multi-faceted pattern. Maybe richer and more complex than Alistair has realized so far.

My

intent here is to ask Alistair and every one of you in the DEV community:

should we keep talking about Hexagonal Architecture and its multiple facets, or

should we start finding new names for some of those facets and awesome

properties?

I’m

more than keen to have your answers.